Jagadish setti

Learning Curve for Oracle Applications Technical and Web Applications

Friday, March 26, 2010

Performance Tunning

SQL Trace File--Performance Tunning

Hi All,Quite often we come across tunning the queires...This is the area where most people find it difficult to understand ..The blind rule every one says check the explain plan and try to remove the full table scans...most of times it works to a extent..

For really tunning to the best u need to understand what oracle is doing to the data you require or perform the transactions you have issued..

For that purpose oracle has give sqltrace utility which will help you to figure out what all operations are getting performed and the way and time taken by each of them

First and foremost we will see what all setups required(mostly done by DBA..but nice to know things)

lets try to answer some simple questions

1.At what level we can enable the trace??

Both at the system level and session level

2.How to know the sessions and enable the trace?

select username, sid, serial#, paddr from v$session where username='APPS'

USERNAME SID SERIAL# PADDR------------------------------ --------- --------- --------APPS 372 313 4308F6F8APPS 373 27 43092A70APPS 375 36 430924B8APPS 376 184 4308DA60APPS 377 334 4308FCB0APPS 378 102 4308A6E8APPS 380 24 43091948APPS 381 12823 4308F140APPS 382 5297 4308EB88

To enable the trace for any one of the sessions

EXECUTE DBMS_SYSTEM.SET_SQL_TRACE_IN_SESSION(

3.Where are the trace files getting stored??

you find the trace files into

Normally trace file are difficult to handle..so we use the tkprof utility

tkprof tracefile.trc t1 RECORD=sqlfile.sql

in other case they are stored in the directory specified in user_dump_dest parameter in the init.ora file

4.What does SQLTrace utility provides??

The diagnostic tool 'sql trace' provides performance information about individual SQL statements and generates the following statistics foreach statement:

* parse, execute, and fetch counts

* CPU and elapsed times

* physical reads and logical reads

* number of rows processed

* misses on the library cache

This information is input to a trace (.trc) file and sql trace can be enabled/disabled for a session or an instance

5.What all setups need to be done for enabling trace in a instance??

The following parameters need to be set up in the "init

* SQL_TRACE + Enable/Disable SQL Trace for the instance.

Values -- TRUE Enable statistics to be collected for all sessions.

FALSE Disable statistics to be collected for all sessions.

* TIMED_STATISTICS + Enable/Disable the collection of timed statistics, such as CPU and elapsed times.

Values ------ TRUE Enable timing (we usually recommend this)

FALSE Default value.

* MAX_DUMP_FILE_SIZE : + Specifies the maximum size of trace files operating system blocks.

Values ------ The default value for this is 500 but if your trace file is truncated then increase this value.

* USER_DUMP_DEST: Specifies the destination for the trace file.

Values : The default value for this parameter is the default destination for system dumps on your operating system.

6.How to enable the trace for a session?

ALTER SESSION SET SQL_TRACE = TRUE;

ALTER SESSION SET SQL_TRACE = FALSE;

For timed statistics

ALTER SYSTEM SET TIMED_STATISTICS = TRUE;

ALTER SYSTEM SET TIMED_STATISTICS = FALSE;

7.how to set SQL Trace Facility for an Instance?

If the initialization parameter SQL_TRACE=TRUE, then statistics will be gathered for ALL sessions. If the facility has been enabled forthe instance, it may be disabled for an individual session by issuing bythe above SQL statement

TKPROF--Performance Tunning

This is one of the documents i had which i collected from metalink..a good one with clear examples...

If you have a system that is performing badly, a good way to identify problem SQL statements is to trace a typical user session and then use TkProfto format the output using the sort functions on the tkprof command line.

There are a huge number of sort options that can be accessed by simply typing 'TkProf' at the command prompt.

A useful starting point is the 'fchela' sort option which orders the output by elapsed time fetching (rememberthat timing information is only available with timed_statistics set to true in the "init.ora" file).

The resultant .prf file will contain the most time consuming SQL statement at the start of the file.

Another useful parameter is sys.

This can be used to prevent SQL statements run as user SYS from being displayed. This can make the output file much shorter an easier to manage.

Remember to always set the TIMED_STATISTICS parameter to TRUE when tracingsessions as otherwise no time based comparisons can be made.

A typical TKPROF out look like for select,insert,update,delete..

call count cpu elapsed disk query current rows

--------- ------------------------------------------------------------------------------------

Parse 2 221 329 0 45 0 0

Execute 3 9 17 0 0 0 0

Fetch 3 6 8 0 4 0 1

------------------------------------------------------------------------------- --------------

Let see the definition for each and every column

Interpreting TkProf Output Guidelines

call : Statisics for each cursor's activity are divided in to 3 areas:

Parse: statisitics from parsing the cursor.

This includes information for plan generation etc.

Execute: statisitics for the exection phase of a cursor

Fetch : statistics for actually fetching the rows

count : number of times we have performed a particular activity on this particular cursor

cpu: cpu time used by this cursor

elapsed: elapsed time for this cursor

disk: This indicates the number of blocks read from disk.

Generally you want to see blocks being read from the buffer cache rather than disk.

query : This column is incremented if a buffer is read in Consistent mode.

A Consistent mode buffer is one that has been generated to give a consistent read snapshot for a long running transaction.

The buffer actually contains this status in its header.

current: This column is incremented if a buffer found in the buffer cache that is new enough for the current transaction and is in current mode (and it is not a CR buffer).

This applies to buffers that have been read in to the cache as well as buffers that already exist in the cache in current mode.

rows: Rows retrieved by this step

What is my first step while tunning the performance of a query ?

It is better to use autotrace feature of SQL*Plus be used on statements rather than using TkProf mainly because the TkProf output can be confusing with regard to whether the Rule or Cost Based optimizer has been used. Because TkProf explain plan does not show any costs or statistics,

it is sometimes not possible to tell definitively which optimizer has been used.

That said, the following output from Tkprof explain plan is useful.

The Rows column next to the explain plan output shows the number of rows processed by that particular step.

The information is gathered from the STAT lines for each cursor in the raw trace output.

Remember that if the cursor is not closed then you will not see any output.

Setting SQL_TRACE to false DOES NOT close PL/SQL child cursors.

Cursors are closed in SQL*Plus immediately after execution.

Let's see some tkprof examples ..in the next post..

TKPROF-Peformance tunning(Example)

Example :

STEP 1 - Look at the totals at the end of the tkprof output===========================================================

OVERALL TOTALS FOR ALL NON-RECURSIVE STATEMENTS

call count cpu elapsed disk query current rows

-----------------------------------------------------------------

Parse [A] 7 1.87 4.53 385 [G] 553 22 0

Execute [E] 7 0.03 0.11 [P] 0 [C] 0 [D] 0 [F] 0

Fetch [E] 6 1.39 4.21 [P] 128 [C] 820 [D] 3 [F] 20

--------------------------------------------------------------------------

Misses in library cache during parse: 5

Misses in library cache during execute: 1

8 user SQL statements in session.

12 internal SQL statements in session

.[B] 54 SQL statements in session.

3 statements EXPLAINed in this session.

1. Compare [A] & [B] to spot over parsing.

In this case we have 7 parses for 54 statements which is ok.

2. You can use [P], [C] & [D] to determine the hit ratio.

Hit Ratio is logical reads/physical reads:

Logical Reads = Consistent Gets + DB Block Gets

Logical Reads = query + current

Logical Reads = Sum[C] + Sum[D]

Logical Reads = 0+820 + 0+3

Logical Reads = 820 + 3

Logical Reads = 823

Hit Ratio = 1 - (Physical Reads / Logical Reads)

Hit Ratio = 1 - (Sum[P] / Logical Reads)

Hit Ratio = 1 - (128 / 823)

Hit Ratio = 1 - (0.16)

Hit Ratio = 0.84 or 84%

3. We want fetches to be less than the number of rows as this will mean we have done less work (array fetching).

To see this we can compare [E] and [F].

[E] = 6 = Number of Fetches[F] = 20 = Number of Rows

So we are doing 6 fetches to retrieve 20 rows - not too bad.

If arrayfetching was configured then rows could be retrieved with less fetches.

Remember that an extra fetch will be done at the end to check thatthe end of fetch has been reached.

4. [G] Shows reads on the Dictionary cache for the statements.

- this should not be a problem on Oracle7.

In this case we have done 553 reads from the Library cache.

STEP 2 - Examine statements using high resourcee

===============================================

update ...

where ...

call------ count ---- cpu --- elapsed --- disk ----query --- current ----rows --

Parse-----1--------- 7 -----122 -------- -0----------0----------0-------------0---

Execute---1------- 75------ 461-------- 5----- [H] 297 -----[I] 3-------- [J]1---

Fetch ---- 0-------- 0------0-------------0--------0-------- 0------------ 0 ---

[H] shows that this query is visiting 297 blocks to find the rows

to update[I] shows that only 3 blocks are visited performing

the update[J] shows that only 1 row is updated.

297 block to update 1 rows is a lot. Possibly there is an index missing?

STEP 3 - Look for over parsing

select ...

call--------count------cpu-------elapsed------disk----query----current---- rows--

--------------------------------------------------------------------------------------

Parse------[M] 2 ----- [N] 221--- 329---------0-------45---------0--------- 0--

Execute---- [O] 3----- [P]9-------17----------0--------0--------- 0----------0--

Fetch------- 3----------6----------8-----------0-------[L] 4-------0-------- [K] 1

Misses in library cache during parse: 2 [Q]

[K] is shows that the query has returned 1 row.

[L] shows that we had to read 4 blocks to get this row back.This is fine.

[M] show that we are parsing the statement twice - this is not desirable especially as the cpu usage is high [N] in comparison to the execute

figures : [O] & [P]. [Q] shows that these parses are hard parses.

If [Q] was 1 then the statemnent would have had 1 hard parse followed by a soft parse (which just looks up the already parsed detail in the library cache).

This is not a particularly bad example since the query has only been executed a few times.

However excessive parsing should be avoided as far as possible by:

o Ensuring that code is shared:

- use bind variables - make shared pool large enough to hold query definitions in memory long enough to be reused.

UNIX Commands

UNIX Basic Commands

How to convert a file from dos mode to unix??

some time shell script developed in the window environment will create some problems because of carriage returns and other thing..those can be removed using the follwoing command

dos2unix

dos2unix movepdf

How to search files in unix??

find . -name "rc.conf" -print

This command will search in the current directory and all sub directories for a file named rc.conf.

Note: The -print option will print out the path of any file that is found with that name. In general -print wil print out the path of any file that meets the find criteria

How to search for a string in a selection of files (-exec grep ...).??

find . -exec grep "murthy ganjam" '{}' \; -print

If you want to just find each file then pass it on for processing use the -q grep option.

This finds the first occurrance of the search string. It then signals success to find and find continues searching for more files.

find . -exec grep -q "murthy ganjam" '{}' \; -print

This command is very important for process a series of files that contain a specific string.

You can then process each file appropriately.

How to view Files in UNIX??

use the cat command

cat filename

The more command will pause after displaying a page of text, and you can go on to the next page of text by hitting the space bar.

You can also do a keyword search of the text by typing

/keyword

For example, if you were looking through a file using the more command, and wanted to skip to the word "drosophila" you would type

/drosophila

and the more command would search through the file until it found that word.

LISTING FILES:

ls -a list all files, including the hidden ones

ls -g list which group owns the files

ls -lag list everything

ls *.txt list only files with a .txt on the end

ls data* list only files that start with the word "data"

ls -lrt list of all the files sorted

ls -la |grep '^d' Look only for files that are directories

ls -la |grep -v '^d' Let's only look for files that are not directories

COPY COMMAND:

cp -r * /tmp you would copy everything in the directory and RECURSIVELY (-r)

everything in the subdirectories underneath that directory to the /tmp directory.

cp file1 file2 copy file1 to a file called file2

cp file1 /tmp copy file1 to the /tmp directory

cp file1 ~smith copy file1 to the home directory of "smith"

cp * /tmp copy everything in the directory to the /tmp directory

MOVE COMMAND:

mv file1 file2 rename file1 to the name file2

mv file1 /tmp move file1 to the /tmp directory

mv file1 ~smith move file1 to the home directory of "smith"

mv * /tmp move everything in the directory to the /tmp directory

mv dir2 /tmp move the directory called dir2,and everything in it, to the /tmp directory

There is no rename command in unix use this command to rename...

Remove Command:

rm * delete everything in a subdirectory

rm *.txt remove only files with a .txt on the end

rm data* remove only files that start with the word "data"

rm -r dir2 removes everything in the subdirectory "dir2"

CD Command:

cd change to your home directory

cd .. move up one level

cd ~applmgr change to the home directory of user "smith"

cd /tmp change to the /tmp subdirectory

Shell script for File Movement between Servers

the system should get the files and process them

for achieving this we need to have shell script which will connect to the server and ftp the files to the source server..

Plz find the sample script for doing this..

after writing the script we have to schedule the script in unix using a cronjob...

--Shell script starting

#! /usr/bin/ksh

HOST=egltest.test.co.in

USER=applprod

PASSWD=applprod

chmod -R 777 /egltestarch1/oracle/EGLTEST/test/coa/*.txt

exec 4>&1

ftp -nv >&4 2>&4 |&

print -p open $HOST

print -p user $USER $PASSWD

print -p bin

print -p lcd /egltestarch1/oracle/EGLTEST/test/coa

print -p cd /egltestapps1/home/test/coa

print -p mget *.txt

print -p bye

exit 0

--Shell script ending name it as ftpcode.sh

this shell script is saved in /egltestarch1/oracle/EGLTEST/dba/sh/ftpcode.sh

After writing the script we need to a cronjon entry..this entry will run the script on the schedule we have given..

for this

crontab -e

using vi editior commands to make this entry below..this will run the script every minute and log is the written to the file specified on the right side...

# ftp files from application server to database server

* * * * * /eglprodarch1/oracle/EGLPROD/dba/sh/ftpcode.sh > /egltestarch1/oracle/EGLtest/dba/log/ftpcode.log 2>&1

once the files are dowloaded make sure to delete or move to other folders to automate the FTP process...

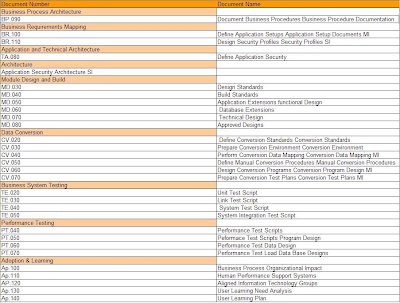

Oracle Apps Implementation

I think you people might have come across different best practices and some amazing workarounds.

I request all of you to provide me your valuble inputs to put a road map for a ideal implementation.

From now onwards I will be sending different best practices and Process so that we can evalutate the pros and cons of each.

First of all let me list different techonologies and tools required at different stages of implementation.

From now onwards we can evaluate each technology and tool for different types of situations.

Data loading stage:

1.sql loader

2.GUI tools like DATA loader

3.Oracle External Tables(from 9i)

4.WebADI

User interface Development:

1.Forms6i

2.OAF(Jdeveloper) Reporting Tools:

1.Reports Builder

2.XML Publisher

3.PL/SQL Reports

4.Oracle Discoverer

Business logic implementation:

1.Work Flow

2.AME(Approval's Management) Outbound interfaces:

1.EDI

2.XML Gate way

3.Custom made outbound interfaces using PL/Sql

Inbound Interfaces:

1.Open Interfaces

2.API’s

3.EDI

4.XML Gateway(Not Sure)

Others:

1.Oracle Alerts

2.OAF Personalization

3.Forms Personalization

Tools:

1.TOAD

2.SQL Client

3.PL/SQL Developer

Code Formatting Tools:

1.Formatter Plus

2.PL/SQL Developer Formatter Configuration Tools:

1.VSS

2.CVS

1. keep the source of all Customized objects(Tables,Packages,Procedures ..) in the PLS folder in the custom top.

It is easy to say but making people to follow it is very difficult(Even i don't :-) ).One way to make people to follow is by dropping objects whose lastest source is not present in the UNIX box.

we need to compare the last update date in the UNIX box and last_modified date in the all_objects...i witnessed this idea really working..This also removes the Cost factor of maintaining a VSS(VSS might have its own Advantages) also...

2.All Customized objects should be labeled.Example XXLGEOM2309.and the name of the object should be decided prior to the development

Lets say for example u have a Custom process involving a concurrent program,a form and a workflow.

For each object if u have one lablel right at the design phase all the documets can have a process design showing different components getting involved at differnt stages in the process.

3.A Repository of all the process and all the objects associated with it should be maintained.Thats what MD70 should contain...But most of the times it wont..they are always some missing objects.

we will realize the importance of it when the people starts leaving the company during implemenataion..

Functional:

1.Its always better to identify one process owner From the customer side for each process or Area.

Even though it is not with in our control the management should insist for it from the starting because it clears a lot of hurdles during implementation

2.All Functional people should have a fair idea of all the technologies used . they need not be experts but aleast they should understand the usages of it,its advantages&limitations .

(Guys with a little idea straightaway dont start urself giving techincal solutions..use the knowlege to understand what's happening..in future after enought experience u may....)

This will help u a lot when u r designing solutions...

Technical:

Let us discuss the reports..

1.The most common custom objects we see in any normal implentation(10-20% Customization) is Reports.

I personally feel the layout designing of the report should be the in the last stage of the implementation.Because the 90% of the Customer concern will be the data that he will get to analyze and 10% will be the Format it is presented.

so it is better to show the user the raw data every time using the tools like toad.Once user is happy with the data just have the sql and keep it aside.This gives us the flexibility of adding a column or removing it during the process development

Because adding a column or removing a column in tools like report builder takes a considerable amount of time.

It doesn't remove total rework but alteast reduces it considerably..

First let me list different types of reports from customer perspective..

1.Reports Presented to Authorities and top management--Look & Feel and the layout are important

2.Reports used for analyzation purpose and day-to-day operatons--like daily Shipping details report and Shipping lead time)

3.Reports Used for Record purpose--Keeping the data aside

4.MIS Reporting--Summary reports ,Report used to tally data from differnt applications in apps--Perfomance is pretty important as they run on huge data .

Let me list different reporting tools and some of their advantages

Report Builder:

Advantages:

Good for Complex layouts involving images and for Pre -Printed Document

Developer will have more control on the layout

Many Technical People knows very much how to use it

Disadvantages:

Report building time is Relatively High

Handling small layout changes is even more difficult

Data & Layout are combined into one unit--If I want to see same data in 3 different layouts we have to design three reports

PL/SQL Reports

Advantages

Easy to Build and maintain

Just PL/sql Knowledge is Enough

Should be used for analyzation reports and day-to day operation Reports

Disadvantage

Can handle only very simple layouts

XML Publisher

Advantages

Replacement for Report Builder

Can handle Complex layout also

Layout Design can be done in Microsoftword,XSLT --Compartively Easy

We can have one report with different layouts and layout can be selected at run time

I heard even we can get single report in multiple layout by running it only one time--Not sure as I have not used it

Disadvantages:

Only available in after 11.5.9(with a patch),11.5.10 onwards

Requires sound knowledge of microsoft word tables and word feautures

Many people still don’t have much idea on it..

Some time output goes wavierd on hand experience is required to find workarounds--(My own Experience)

Discoverer Reports

Advantages:

Multi Dimension analysis

Easy to build and use

Disadvantages:

Separate License

No formatting options at all..can be used only for analysis not for reporting

Best Practices:

1.Always have the rightly balanced teams.

A typical ratio for medium complexity process would be(1:1:2 )(Functional:Technical Designer:Developers).

Techical designer should be a guy who understands both the importance of business and technical limitations.

And the rule should be , technical designer should always be involved at the Functional Discussions with the customer.

This always help for not committing things which are not technically feasible..(Nothing is impossible ..but the effort needed to make it happen is worth or not??)

2.The other big Question is how should I divide my project team???

Method A>Functional,Technical( With in technical people by technologies they are good at like ..reports ,Forms & OAF ...)

Method B> One Process one team.(Atleast team size should be more than 20 to implement this)

There are advantages & disadvantages of both the ways..I am part of implementations which are done both ways....Lets me list some of them...

Method A:

Advantages: Less time is taken for development of components..(As technical people are doing what they are good at..)

Disadvantages:

1.I don't know whether I am correct or not in quoting this but the truth is you will find a clear divide between the two teams..And once things start going wrong people start blaming each other...I think every one might have experienced it already..

2.For technical people it is even bigger loss because you work on different components belonging to different process and never understand how your component fits in the overall solution. At the end of day it will be just a

piece of code...

3.Any change in the process during the course of development is very difficult to handle, as there will be inter dependencies among components

Method B:

Advantages:

1.Every one will feel the ownership of the process and better team spirit.

2.Technical team will also have better understanding of the processes and will able to implement the changes faster (as In a development project change is unavoidable ) as they have all the components with them

Disadvantages:

1.Development time might be a bit long as the technical people in u r team might not have expertise in all the technologies involved in the process

My views might be a bit biased as I am strong supporter for method B..

Technical:

Coming to our today's technical discussion ...data loading..This will be one of the first steps(development) and the last step(before live) of a implementation..

The typical way of data loading is a three step process.

1.To load data from the legacy/Flat files to temporary tables.

2..Perform all the validations on the temporary table data and load it to the open interfaces or API's

3.Run the Open interface Concurrent programs/API's to load data to the standard tables.

First let me figure ways of data movement in oracle apps..

1.Open Interfaces-- used for live interfaces(every day activity) & one time loading

2.API's--Almost same as Open interfaces but are easy to handle.(validation & integrity logic is taken care by them)

3.Out Bound Interfaces--Required if we integrate oracle apps with third party or legacy systems

4.EDI--automation process..we will talk about them later

For loading data

SQL Loader:

1.Used when data is available in flat files(tab delimited,Comma delimited)

2.Faster & Easier way of loading data

3.Can use sql functions to modify data

DB Links: if the legacy systems is on oracle data base the best thing is to get access to the data customer want to import through db links

Data loader tool : These are third party tools used for loading ,which automates the process of punching data.There are very much user friendly and not much technical expertise is required to work with them.

But the biggest disadvantage of these tools is they are slow.If you have huge data it is not advisable to use them(unless u r patient enough to see thru :-) )

The data loaded will be valid because it is as good as manual punching..

XML: Oracle Provides Apis to import XML data and to export data into XML.This should be most convenient way for data interaction as most of the other technology systems are able to parse XML data easily.

There are some limitations(can be easily overcome) also for these in oracle like while importing XML data into oracle tables oracle can't parse huge files

WEBADI: These are oracle provided templates for data loading into some standard interfaces.Easy to use. we can create the custom api's and use for data loading..i felt this is one of the best ways of loading data

UTL_FILE: it is PL/SQL way of loading data into oracle tables.This packages gives us the API to read and data into flat files.This method is very useful when the data to be loaded is less and more validations are required

before loading.One of the limitations of this pacakge it reads data in lines and the maximum length it can read is 1022 charecters..In writing data to files it can write only 32K in one shot..later we need to close the file

and reopen it again..

External Table:This concept concept comes from Oracle 9i onwards.This is one of the easiest way of loading data.Once you create the external table you can simple use the select stament to query the table.

On performance basis this is as good as Direct path loading of SQLLDR.(Technical People Give a try for this...)

Caution:Disable any indexes on the table before loading data..other wise it will slow down the process of loading data.

On summary for all conversions(one time data movement) use External Tables or SQL Loader

For interfaces use PL/sql,dblinks or XML

HI all i have not used SQL loader much..most of the time i have used External tables ,UTL_FIle and XML.So people who has much exposure can come up with any limitations or advantages of it..

Plz let me know if any thing is wrong or any other suggestions to make this better....